When reading the article Crunching 30 Years of NBA Data with MongoDB Aggregation I coulnd’t help but think that we’ve been enjoying aggregates in SQL for 3 or 4 decades already. When using PostgreSQL it’s even easy to actually add your own aggregates given the SQL command create aggregate.

The next step after thinking how obvious the queries written in the mentionned article would be to express in SQL was to actually load the data into PostgreSQL and write the aggregate queries, of course.

Table of Contents

Loading the data

With the help of a little bit of Common Lisp code and using the mongo-cl-driver it was easy enough to parse the given BSON file. What was more complex was to actually understand enough of the data model to produce a relational design out of it, avoiding data redundancy as much as possible.

We call that step normalization in old-style relational databases, and the goal of that process is to avoid functional dependency so that the data is easier to understand, verify and process the data once loaded.

For instance, rather than have both scores from each team and a column

won

per team, which would be a boolean but is a number in the given

BSON file,

we store only the scores. Here’s the main table definition of the stats we

are going to be playing with, the

game table:

create table nba.game (

id serial primary key,

date timestamptz,

host int references nba.team(id),

guest int references nba.team(id),

host_score int,

guest_score int

);

As much of the aggregates in the referenced article are playing with statistics from teams who actually won the game, let’s create a view to simplify our SQL queries thereafter:

create view winners as

select id,

date,

case when host_score > guest_score

then host

else guest

end as winner

from game;

If you’re not doing much SQL, remember that creating such a view is common practice in the relational world.

Running the Aggregates

Now that we have the extra useful view, it’s possible to implement the first MongoDB query in SQL. First, let’s have a look at the MongoDB query:

db.games.aggregate([

{

$match : {

date : {

$gt : ISODate("1999-08-01T00:00:00Z"),

$lt : ISODate("2000-08-01T00:00:00Z")

}

}

},

{

$unwind : '$teams'

},

{

$match : {

'teams.won' : 1

}

},

{

$group : {

_id : '$teams.name',

wins : { $sum : 1 }

}

},

{

$sort : { wins : -1 }

},

{

$limit : 5

}

]);

I don’t know about you, but I have quite a hard time deciphering what that query is actually doing, and when the explanation text talks about using a 6-stage pipeline my understanding is that the application developper has been writing the execution plan of the query here. Let’s ignore the query format itself, as it’s obviously meant to be generated by a tool rather than typed by a human being.

Here’s the same query in SQL, with the result this time:

SELECT abbrev, name, count(*)

FROM winners JOIN team ON team.id = winners.winner

WHERE date > '1999-08-01T00:00:00Z'

AND date < '2000-08-01T00:00:00Z'

GROUP BY winner, abbrev, name

ORDER BY count(*) DESC

LIMIT 5;

abbrev | name | count

--------+------------------------+-------

LAL | Los Angeles Lakers | 67

POR | Portland Trail Blazers | 59

IND | Indiana Pacers | 56

UTA | Utah Jazz | 55

SAS | San Antonio Spurs | 53

(5 rows)

Time: 8.101 ms

What we have here is a pretty basic query using a join, a where clause to restrict the data set we are playing with, a group by clause to define which data to compute the aggregates against, with an order by and a limit clause for presenting the result. To be realistic, if you’ve ever done any SQL at all, then you know how to read that query because you’ve been writing dozens of similar ones.

Here’s, as in the original article, the same query against a much larger data set this time, with all games of the 2000s decade:

SELECT abbrev, name, count(*)

FROM winners join team on team.id = winners.winner

WHERE date > '2000-08-01T00:00:00Z'

AND date < '2010-08-01T00:00:00Z'

GROUP BY winner, abbrev, name

ORDER BY count(*) DESC

LIMIT 5;

abbrev | name | count

--------+--------------------+-------

SAS | San Antonio Spurs | 579

DAL | Dallas Mavericks | 568

LAL | Los Angeles Lakers | 524

PHO | Phoenix Suns | 495

DET | Detroit Pistons | 489

(5 rows)

Time: 24.713 ms

Correlating stats with wins

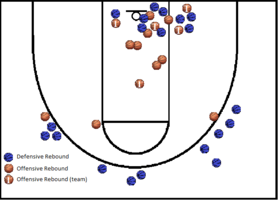

The goal here is to compute how often a team wins when they record more defensive rebounds than their opponent across the entire data set.

To be able to compute the percentage, we have to have a count of all

registered games, of course. Then we are going to count how many times the

winner team registered a greater

team_stats.drb than the loser, and

count

how many times in SQL is usually written as a

sum(case when <condition> then 1 else 0 end), which is what we’re doing here:

select count(*) as games,

sum(case when ws.drb > ls.drb then 1 else 0 end) as drb,

sum(case when ws.drb > ls.drb then 1 else 0 end)::float / count(*) * 100 as pct

from winlose wl

join team w on wl.winner = w.id

join team l on wl.loser = l.id

join team_stats ws on ws.game = wl.id and ws.team = wl.winner

join team_stats ls on ls.game = wl.id and ls.team = wl.loser;

games | drb | pct

-------+-------+------------------

31686 | 22292 | 70.3528372151739

(1 row)

Time: 276.669 ms

We note here than in the original MongoDB article the aggregation query is short of computing the percentage directly, apparently it’s been done in the client tool, maybe using a spreadsheet application or something.

Defensive Rebounds and Total Rebounds Versus Win Percentage

Next, still following on our inspirational article Crunching 30 Years of NBA Data with MongoDB Aggregation, we’re going to compute what percentage of the time a team wins as a function of the number of defensive rebounds they recorded.

I’m not sure I understand what they achieve with averaging ones when a team wins and zero when a team loses, so I couldn’t quite reproduce their result. Here’s an approaching query tho:

with game_stats as (

select t.id, count(*)

from team t join game on game.host = t.id or game.guest = t.id

group by t.id

)

select ts.team, round(avg(drb), 2) as drb,

round(count(*) / gs.count::numeric * 100, 2) as winpct,

count(*) as wins, gs.count as games

from team_stats ts

join game on game.id = ts.game

and game.host = ts.team

and game.host_score > game.guest_score

join game_stats gs on gs.id = ts.team

group by ts.team, gs.count;

team | drb | winpct | wins | games

------+-------+--------+------+-------

4 | 31.59 | 31.46 | 710 | 2257

7 | 32.55 | 31.89 | 720 | 2258

16 | 31.70 | 37.62 | 849 | 2257

I only pasted the first few lines of the result because I’m not sure how to make sense of it, really.

Interesting factoid

What I find most interesting in the following factoid proposed in the MongoDB article is the complete lack of the query you need to run in order to grab the matching data:

An interesting factoid: the team that recorded the fewest defensive rebounds in a win was the 1995-96 Toronto Raptors, who beat the Milwaukee Bucks 93-87 on 12/26/1995 despite recording only 14 defensive rebounds.

When doing the necessary query in SQL, using a

Common Table Expression (the

WITH syntax) and a

Window Function for good measure, we get actually 4

different games with the minimum defensive rebounds in our history of NBA

games, 14:

with stats(game, team, drb, min) as (

select ts.game, ts.team, drb, min(drb) over ()

from team_stats ts

join winners w on w.id = ts.game and w.winner = ts.team

)

select game.date::date,

host.name || ' -- ' || host_score as host,

guest.name || ' -- ' || guest_score as guest,

stats.drb as winner_drb

from stats

join game on game.id = stats.game

join team host on host.id = game.host

join team guest on guest.id = game.guest

where drb = min;

-[ RECORD 1 ]----------------------------

date | 1995-12-26

host | Toronto Raptors -- 93

guest | Milwaukee Bucks -- 87

winner_drb | 14

-[ RECORD 2 ]----------------------------

date | 1996-02-02

host | Golden State Warriors -- 114

guest | Toronto Raptors -- 111

winner_drb | 14

-[ RECORD 3 ]----------------------------

date | 1998-03-31

host | Vancouver Grizzlies -- 101

guest | Dallas Mavericks -- 104

winner_drb | 14

-[ RECORD 4 ]----------------------------

date | 2009-01-14

host | New York Knicks -- 128

guest | Washington Wizards -- 122

winner_drb | 14

Time: 126.276 ms

To understand all there’s to know about window functions, have a look at my article on the topic: Understanding Window Functions.

Total rebounds and wins

The next interesting aside is the following:

As an aside, the Cleveland Cavaliers beat the New York Knicks 101-97 on April 11, 1996, despite recording only 21 total rebounds. Inversely, the San Antonio Spurs lost to the Houston Rockets, 112-110, on January 4, 1992 despite recording 75 total rebounds.

Which we translate in SQL as the following query:

with stats as (

select ts.game, ts.team, trb,

min(trb) over () as min,

max(trb) over () as max

from team_stats ts

join winners w on w.id = ts.game and w.winner = ts.team

)

select game.date::date,

host.name || ' -- ' || host_score as host,

guest.name || ' -- ' || guest_score as guest,

stats.trb as winner_trb

from stats

join game on game.id = stats.game

join team host on host.id = game.host

join team guest on guest.id = game.guest

where trb = min or trb = max;

-[ RECORD 1 ]--------------------------

date | 1995-12-28

host | Dallas Mavericks -- 103

guest | Vancouver Grizzlies -- 101

winner_trb | 76

-[ RECORD 2 ]--------------------------

date | 1996-04-11

host | New York Knicks -- 97

guest | Cleveland Cavaliers -- 101

winner_trb | 21

-[ RECORD 3 ]--------------------------

date | 2007-01-29

host | Utah Jazz -- 115

guest | New Jersey Nets -- 116

winner_trb | 21

Time: 127.771 ms

Again it’s easy enough in SQL to have more details about the aside presented in our source article, and we get a slightly different story.

Conclusion

It’s quite hard for me to appreciate the work done in the MongoDB aggregation framework really, when we’ve been enjoying advanced aggregation and statistics in PostgreSQL for a very long time. With the addition of Window Functions and Aggregate Functions for Statistics it’s possible to implement advanced analysis right into your SQL queries.

PostgreSQL is YeSQL

PostgreSQL is YeSQL

In next PostgreSQL release the set of analytical functions is going to

expand again with the addition of both

Ordered-Set Aggregate Functions (also

known as

inverse distribution functions) and

Hypothetical-Set Aggregate Functions (also known as

WITHIN GROUP).

When the problem you have to solve involves analyzing data, one of the more advanced tooling you can find around certainly is the SQL language, in particular its implementation in PostgreSQL!